Proprioceptively Displayed Interfaces: Aiding Non-Visual On-Body Input through Active and Passive Touch

My dissertation work has been published as the journal article "Proprioceptively displayed interfaces: aiding non-visual on-body input through active and passive touch" in *Personal and Ubiquitous Computing*.

Abstract

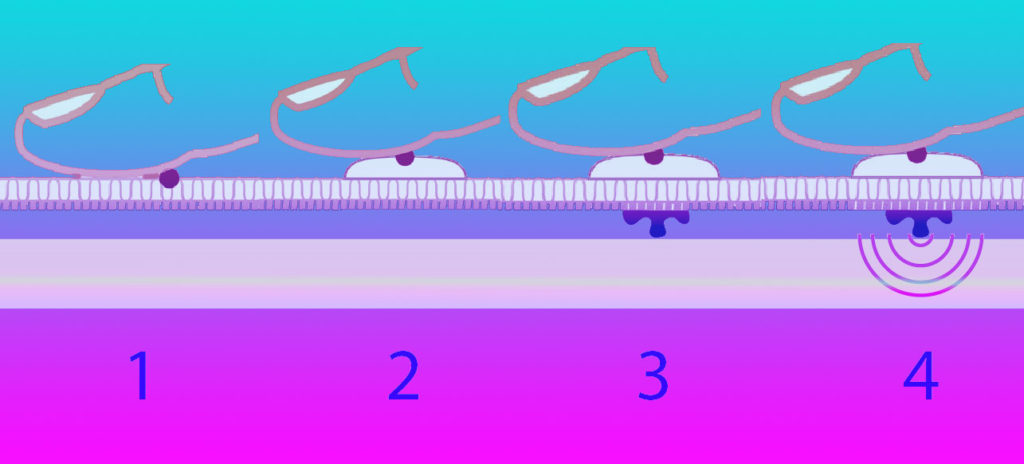

On-body input interfaces that can be used accurately without visual attention could have a wide range of applications where vision is needed for a primary task: emergency responders, pilots, astronauts, and people with vision impairments could all benefit from interfaces made accessible in this way. This paper describes a between-participants study (104 participants) that determines how well users can locate the discrete target touch points of an e-textile interface on the forearm without visual attention. We examine whether adding active touch embroidery and passive touch nubs (metal snaps with vibro-tactile stimulation) helps users locate input touch points accurately. We found that touch points toward the middle of the interface on the forearm were more difficult to touch accurately than those at the ends. We also found that adding vibro-tactile stimulation improves the accuracy of touch interactions by over 9% on average, and by almost 17% in the middle of the interface.
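The accuracy comparison summarized above can be illustrated with a small sketch. This is not the study's analysis code: the `Trial` record, the condition labels `"baseline"` and `"vibro"`, the scoring rule (a touch counts as correct only if it registers on the requested touch point), and the example trials are all hypothetical, chosen only to show how per-position and overall accuracy could be computed and compared between conditions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    condition: str  # hypothetical condition label, e.g. "baseline" or "vibro"
    target: int     # index of the touch point the participant was asked to touch
    touched: int    # index of the touch point that actually registered


def accuracy_by_target(trials, condition):
    """Return {target index: fraction of correct touches} for one condition."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for t in trials:
        if t.condition != condition:
            continue
        totals[t.target] += 1
        # Assumed scoring rule: correct only if the registered point is the target.
        hits[t.target] += int(t.touched == t.target)
    return {k: hits[k] / totals[k] for k in sorted(totals)}


def overall_accuracy(trials, condition):
    """Fraction of correct touches across all targets for one condition."""
    selected = [t for t in trials if t.condition == condition]
    if not selected:
        raise ValueError(f"no trials for condition {condition!r}")
    return sum(t.touched == t.target for t in selected) / len(selected)


# Toy trials for a hypothetical three-point interface (not data from the study).
example_trials = [
    Trial("baseline", 0, 0), Trial("baseline", 1, 2), Trial("baseline", 2, 2),
    Trial("vibro", 0, 0), Trial("vibro", 1, 1), Trial("vibro", 2, 2),
]

if __name__ == "__main__":
    for cond in ("baseline", "vibro"):
        print(cond, accuracy_by_target(example_trials, cond),
              round(overall_accuracy(example_trials, cond), 3))
```

Breaking accuracy down by target index is what makes a position effect, such as the middle points being harder than the ends, visible at all; a single overall score per condition would hide it.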